Go: Gossip Gloomers - An Incomplete Distributed Systems Challenge

This article has been sitting in my drafts for about four months, waiting for me to finish the challenges. Since I’ll probably never get around to write more about them, I’m sharing what I managed to accomplish so far. Hope you enjoy it :)

If you want to see the full repo: GitHub repo

Table of Contents

- Introduction

- Challenge Architecture

- Preparation

- Challenges

- Conclusion

Introduction

Recently I discovered Gossip Gloomers - a Fly.io Distributed Systems Challenge, immediately thought that it would pretty fun to give it a try — both to deepen my knowledge of distributed systems and to get some more practice with Go.

In this blog post, I will talk about how these challenges work and how I got through them.

Just a reminder: I'm not an expert in distributed systems, and this post isn't meant to be a study guide. It's simply a record of my approaches to solving each challenge and an invitation for you to solve them yourself. So, feel free to share your thoughts and suggest any improvements! :)

You can find all of my solutions in this GitHub repo.

Challenge Architecture

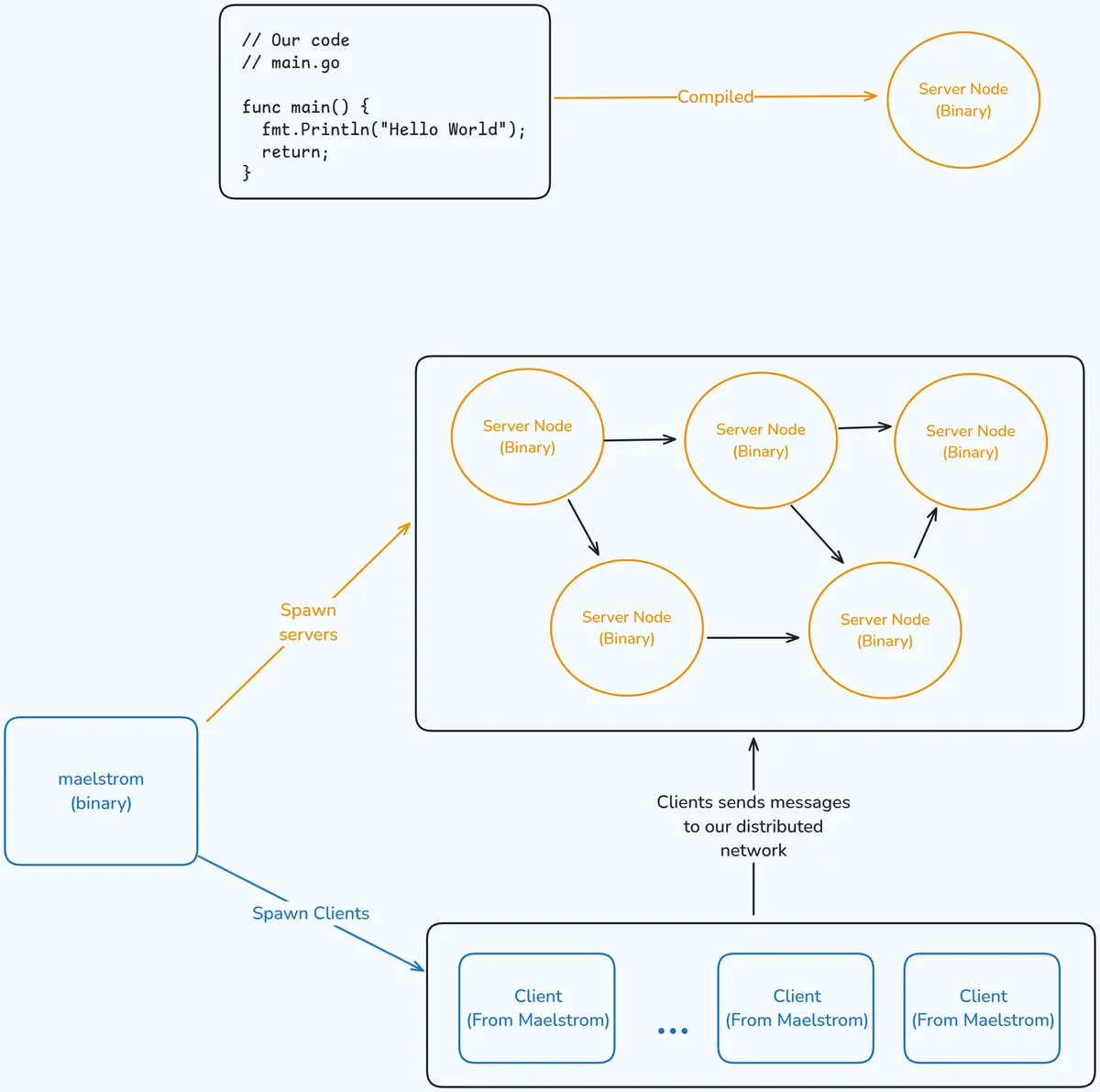

Maelstrom is a workbench for learning distributed systems by writing your own. It provides a lot of different tests suites for different type of systems, such as commutative sets or transactional key-value stores. Users can implement these patterns in their programming language of choice, and maelstrom takes care of spawning instances of the resulting binary as nodes in a distributed systems and running the tests. Below there is a diagram illustrating this interaction.

To know more details about Maelstrom, I recommend you yo read their official documentation, you can find it in GitHub.

Preparation

In my implementations, I used the Go programming language, version 1.22.5.

I also used the latest version of maelstrom. You can follow the installation guide available in the official GitHub repository.

For each solution we write for the challenge, there are a few steps we’ll always need to follow:

- Create a new Go module, for example:

mkdir maelstrom-echo cd maelstrom-echo go mod init maelstrom-echo go mod tidy

- Create a main.go file in the new directory, where the implementation will be written.

- Install the Maelstrom library for Go:

go get github.com/jepsen-io/maelstrom/demo/go

- Implement the solution.

- Compile the solution:

go install .. It will go to~/go/bin/mod_name. - Run the appropriate test for the challenge you're solving (I suggest checking the bottom of each challenge's page for the correct command). For the echo challenge, the command is:

`./maelstrom test -w echo --bin ~/go/bin/maelstrom-echo --node-count 1 --time-limit 10`

- Notice that this command depends on where your maelstrom executable is located and the name you gave to the Go module for that challenge.

Challenges

Challenge #1: Echo

So, this challenge is pretty straightforward, it's basically a warm up, so we can get used to the workflow with the maelstrom library and test suites.

The challenge states that our node will receive messages of type echo and should respond with a message of type echo_ok. There is no mystery here, I simply followed the approach suggested on the official page, to get used to the challenge format.

This was the final result, a simple handler for messages of type echo that will return the received message after changing it's type to echo_ok.

func main() {

n := maelstrom.NewNode()

n.Handle("echo", func(msg maelstrom.Message) error {

// Unmarshall the message body as an loosely-typed map.

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

body["type"] = "echo_ok"

return n.Reply(msg, body)

})

if err := n.Run(); err != nil {

log.Fatal(err)

}

}

Challenge #2: Unique ID Generation

Unique ID Generation official description.

The second challenge was about creating a globally unique ID generator. The service must be fully available, meaning it can continue to operate even in the face of network partitions.

In this test our system will receive messages of type generate:

{

"type": "generate"

}

And each node that receive a message like this, should respond with a message of type generate_ok with a globally unique ID in its body (the ID can be of any type, string, float, int, array and etc).

{

"type": "generate_ok",

"id": 123

}

The first solution I came up with was to generate a uuid4 and send it in the response to the request. This is because it would ensure that the IDs are unique and wouldn't be affected by network partitions (unlike what would happen with a global counter, for example).

Since I didn’t want to simply use a library, I took the effort to write a function to generate a "uuid4" (or whatever it is I generated), and I added that ID in the response request. You can find the complete solution here, and the relevant parts of it right here:

func randUUID() string {

// Im trusting rand.Int :X

possibleChars: = [...] byte {

'0', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z'

}

// Size 36 because: 8 dash 4 dash 4 dash 4 dash 12 (397a5719-763e-4402-aa58-c2753f80cdbd)

randomID: = make([] byte, 36)

for i: = 0;i < 36;i++{

if i == 8 || i == 13 || i == 18 || i == 23 {

randomID[i] = '-'

} else {

n, _: = rand.Int(rand.Reader, big.NewInt(int64(len(possibleChars))))

randomID[i] = possibleChars[n.Int64()]

}

}

return string(randomID[:])

}

func main() {

n: = maelstrom.NewNode()

n.Handle("generate", func(msg maelstrom.Message) error {

// Unmarshall the message body as an loosely-typed map.

var body map[string] any

if err: = json.Unmarshal(msg.Body, & body);

err != nil {

return err

}

body["type"] = "generate_ok"

body["id"] = randUUID()

return n.Reply(msg, body)

})

if err: = n.Run();err != nil {

log.Fatal(err)

}

}

And this was enough for us to pass the test :D.

Challenge #3

The third challenge is divided into 5 parts, so we can incrementally build a system that's able to gossip messages between all nodes in the cluster.

Part A: Single-Node Broadcast

Single-Node Broadcast official description.

In this part (3A), the idea was to create the base for our future Multi-Node, Fault Tolerant and Efficient Broadcast.

The requirement was that our nodes should be able to handle 3 three different message types:

broadcast: this type of message will arrive with an unique integer value within its body (in themessagefield), and the node should store this value and respond with a message of typebroadcast_ok. Below there is an example of both message types:{ "type": "broadcast", "message": 1000 } { "type": "broadcast_ok" }

read: this type of message requests that a node return all values that it has seen. And the node should do it within a message of typeread_ok. Below is an example of both message types:{ "type": "read" } { "type": "read_ok", "messages": [1, 8, 72, 25] }

topology: this type of message informs the node of who its neighboring nodes are. The node should save its neighbors internally and respond with a message with typetopology_ok.{ "type": "topology", "topology": { "n1": ["n2", "n3"], "n2": ["n1"], "n3": ["n1"] } } { "type": "topology_ok" }

With the requirements clear, the first step was to setup some global state variables, here they come:

NEIGHBORHOOD: Stores all the neighbor nodes received from thetopologymessage.messageStorage: Stores all the messages received frombroadcastmessages (and other future events), ensuring no repetition. Notice thatmessageStorageis declared with async.RWMutexto guarantee thread-safety when accessed by multiple goroutines in the future.

Also, some auxiliary functions were created to modify the state of those variables:

generateRandomMessageID(): Generates a random ID to be used as the message ID for messages sent by the node.storeMessage(messageValue int): Stores a new value in the list of values received by the node, if it hasn’t been received already.readMessages(): Returns the list of all values received by the node up to this point.

Note that these functions directly access global variables. I chose this approach because there was no intention to create multiple instances of these types. This made the code simpler and more straightforward.

var (

NEIGHBORHOOD []string = []string{}

messageStorage = struct {

sync.RWMutex

seen map[int]bool

msgs []int

}{seen: make(map[int]bool), msgs: []int{}}

)

func generateRandomMessageID() (int, error) {

idBig, err := rand.Int(rand.Reader, big.NewInt(100000))

if err != nil {

return 0, err

}

return int(idBig.Int64()) + 1, nil

}

func storeMessage(messageValue int) {

messageStorage.Lock()

defer messageStorage.Unlock()

if _, exists := messageStorage.seen[messageValue]; !exists {

messageStorage.seen[messageValue] = true

messageStorage.msgs = append(messageStorage.msgs, messageValue)

}

}

func readMessages() []int {

messageStorage.RLock()

defer messageStorage.RUnlock()

copiedMsgs := make([]int, len(messageStorage.msgs))

copy(copiedMsgs, messageStorage.msgs)

return copiedMsgs

}

After creating the utility part, we then created the functions to handle each type of message event and used them in our main function.

func main() {

n := maelstrom.NewNode()

n.Handle("broadcast", func(msg maelstrom.Message) error {

return operations.HandleBroadcast(n, msg)

})

n.Handle("read", func(msg maelstrom.Message) error {

return operations.HandleRead(n, msg)

})

n.Handle("topology", func(msg maelstrom.Message) error {

return operations.HandleTopology(n, msg)

})

if err := n.Run(); err != nil {

log.Fatal(err)

}

}

In the case of broadcast messages, the handler function simply stores the received value in our global state variable messageStorage. The process follows these steps: first, we perform a JSON unmarshalling; then, we extract the value from the body, convert it to an integer, and store it inside messageStorage (if it hasn’t been stored already). Finally, we send a response of type broadcast_ok.

From this point forward, since unmarshalling and value extraction are common steps in most message types, I will not go into as much detail when describing them in the following explanations.

func HandleBroadcast(n *maelstrom.Node, msg maelstrom.Message) error {

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

// Extract the value

value := body["message"]

v, ok := value.(float64)

if !ok {

return fmt.Errorf("broadcast: value type assertion failed")

}

messageValue := int(v)

storeMessage(messageValue)

response := map[string]any{

"type": "broadcast_ok",

"msg_id": body["msg_id"],

}

return n.Reply(msg, response)

}

For the read message handler function, the process is quite simple. Using our helper function readMessages, we gather all the values the node has received so far and send them in a read_ok message, formatted as a list.

func HandleRead(n *maelstrom.Node, msg maelstrom.Message) error {

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

messagesCopy := readMessages()

response_body := map[string]any{

"type": "read_ok",

"messages": messagesCopy,

"msg_id": body["msg_id"],

}

return n.Reply(msg, response_body)

}

Finally, for handling topology messages, the responsible function will extract the topology field from the received message, search for the current node's neighbors within this structure, and store the neighbors in our state variable NEIGHBORHOOD. If you need, you can recheck the topology message structure above in the text.

func HandleTopology(n *maelstrom.Node, msg maelstrom.Message) error {

// Unmarshall the message body as an loosely-typed map.

var body map[string]any

if err := json.Unmarshal(msg.Body, &body); err != nil {

return err

}

response := map[string]any{

"type": "topology_ok",

"msg_id": body["msg_id"],

}

topology, ok := body["topology"].(map[string]any)

if !ok {

return fmt.Errorf("topology type assertion failed")

}

if neighbors, exists := topology[n.ID()]; exists {

neighborsSlice, ok := neighbors.([]any)

if !ok {

return fmt.Errorf("neighbors type assertion failed")

}

temp := make([]string, len(neighborsSlice))

for i, v := range neighborsSlice {

id, ok := v.(string)

if !ok {

return fmt.Errorf("failed to convert neighbor to string")

}

temp[i] = id

}

NEIGHBORHOOD = temp

}

return n.Reply(msg, response)

}

With that done, our program passes the tests for challenge 3a.

My implementation ends here, and I don’t promise to continue working on it.

Part B: Multi-Node Broadcast

Multi-Node Broadcast official description.

Part C: Fault Tolerant Broadcast

Fault Tolerant Broadcast official description.

Part D: Efficient Broadcast, Part I

Efficient Broadcast, Part I official description.

Part E: Efficient Broadcast, Part II

Efficient Broadcast, Part II official description.

Challenge #4: Grow-Only Counter

Grow-Only Counter official description.

Challenge #5:

Part A: Single-Node Kafka-Style Log

Single-Node Kafka-Style Log official description.

Part B: Multi-Node Kafka-Style Log

Multi-Node Kafka-Style Log official description.

Part C: Efficient Kafka-Style Log

Efficient Kafka-Style Log official description.

Challenge #6:

Part A: Single-Node, Totally-Available Transactions

Single-Node, Totally-Available Transactions official description.

Part B: Totally-Available, Read Uncommitted Transactions

Totally-Available, Read Uncommitted Transactions official description.

Part C: Totally-Available, Read Committed Transactions

Totally-Available, Read Committed Transactions official description.